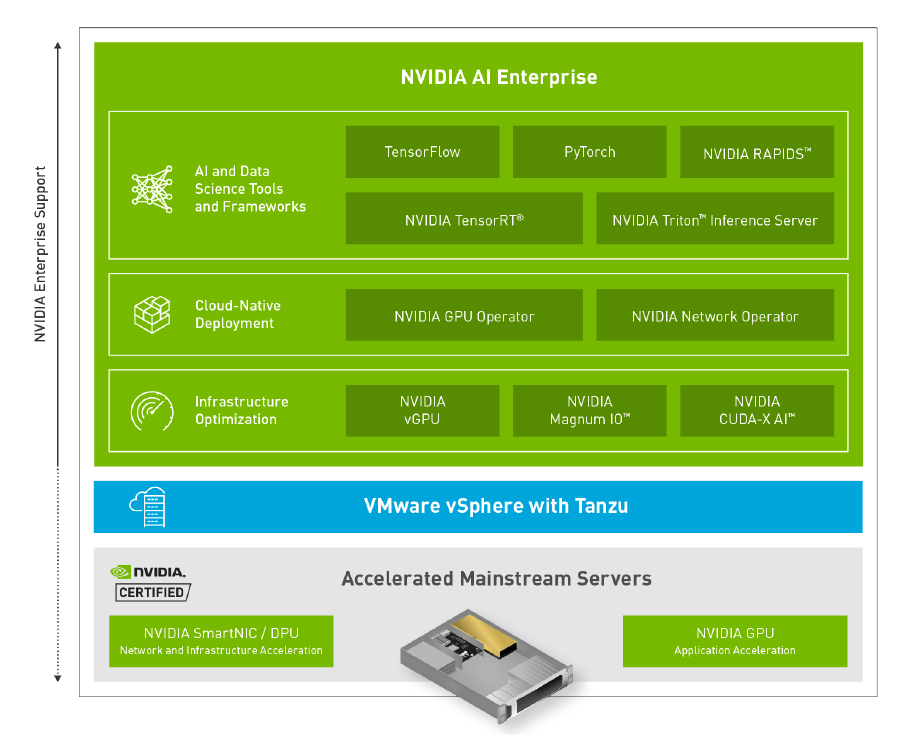

Part 3: Using CUDA Kernel Concurrency and GPU Application Profiling for Optimizing Inference on DRIVE AGX | NVIDIA On-Demand

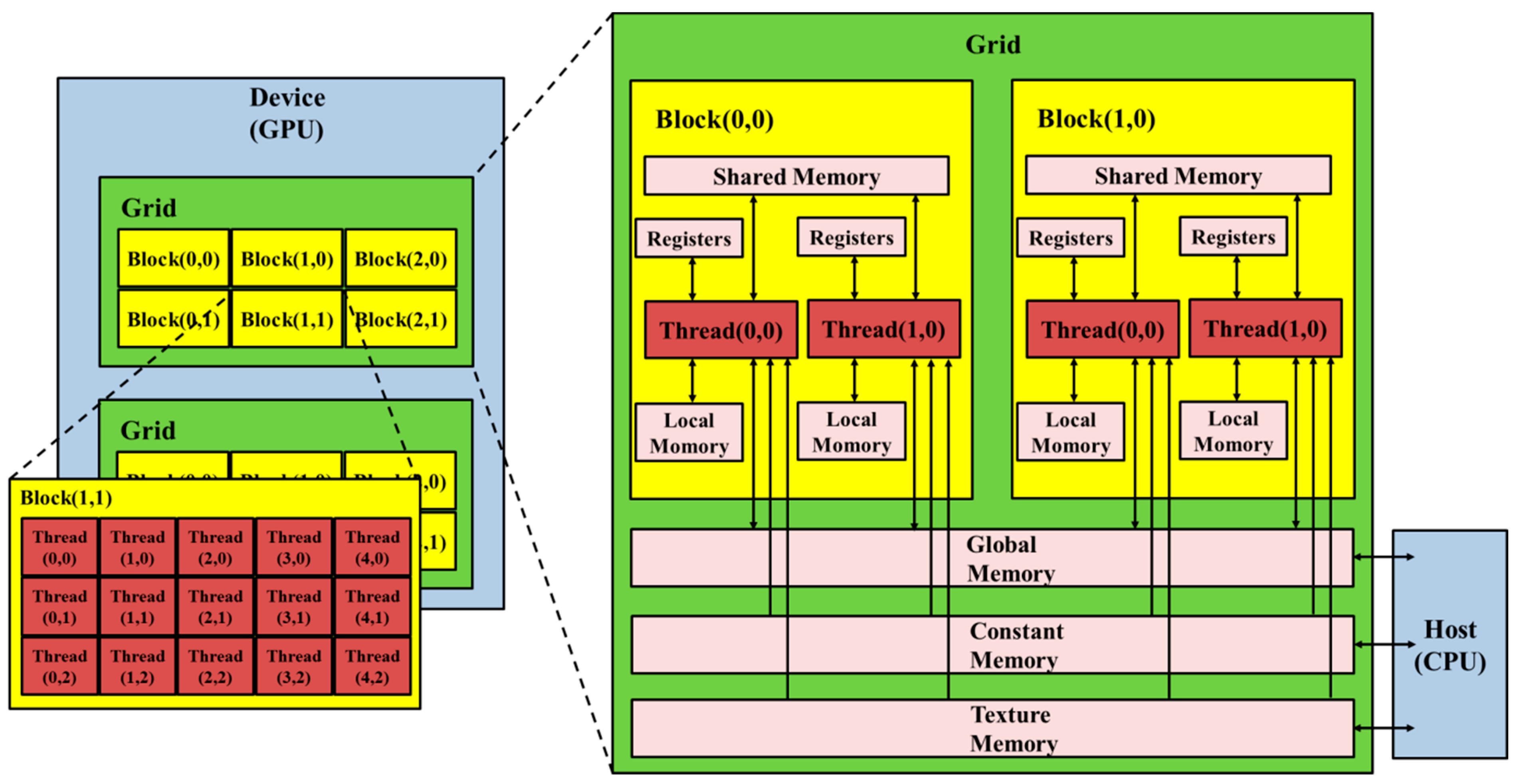

Electronics | Free Full-Text | CUDA-Optimized GPU Acceleration of 3GPP 3D Channel Model Simulations for 5G Network Planning

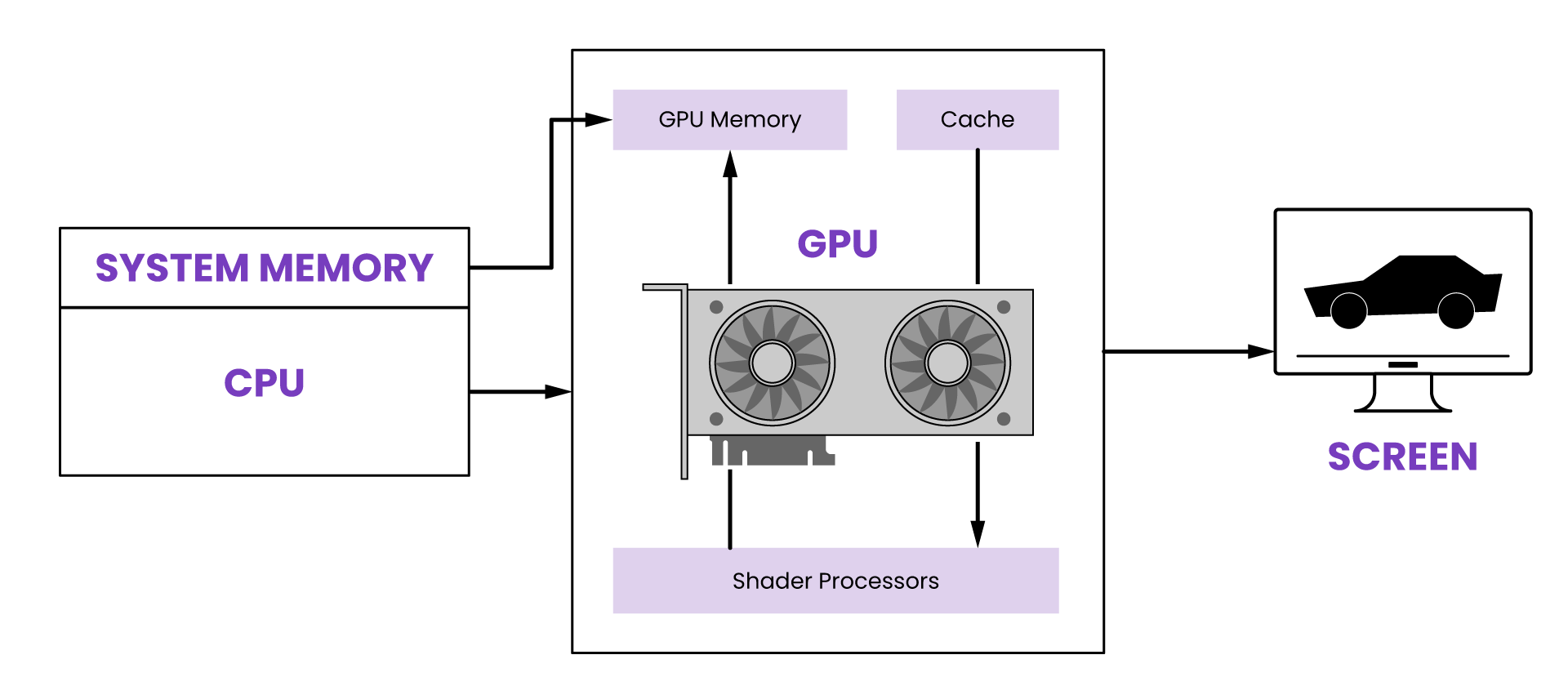

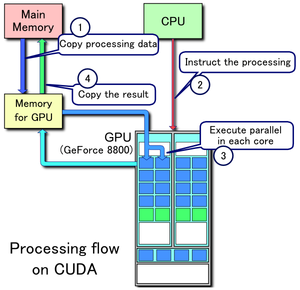

Does NVIDIA provide a CUDA driver that can be installed on a machine without a GPU? After installing the CUDA driver on such a machine, can we debug it